Social Intelligence & Content Discovery

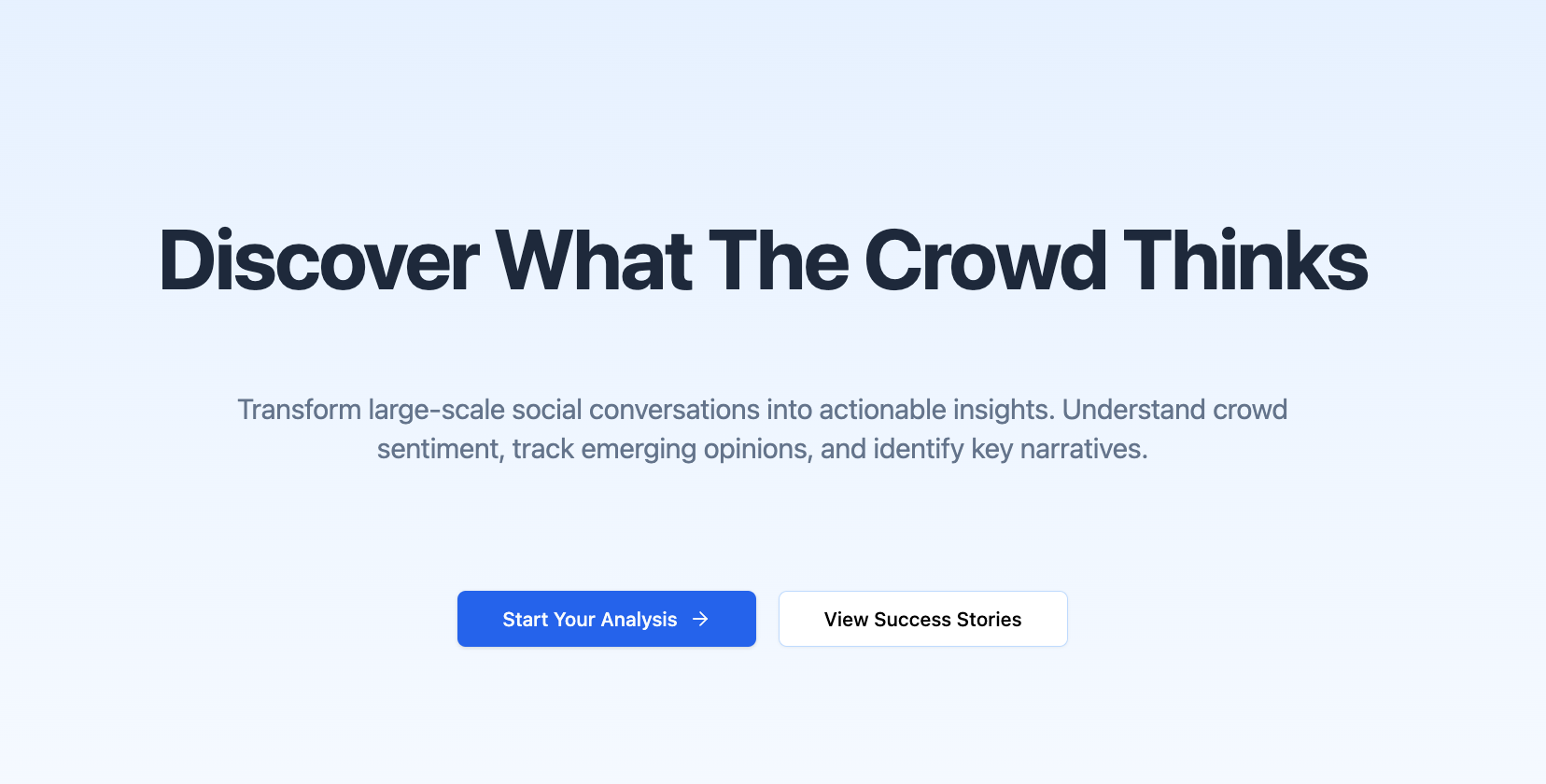

Enhancing personalization in content ecosystems and insights from large-scale social conversations.

Enabling personalized learning and knowledge discovery through intelligent AI interfaces.

Building tools for actionable insight and creative multi-modal advertisement content generation.

May 15, 2025

An analysis of traditional search paradigms and a framework for integrating AI capabilities into content discovery platforms.

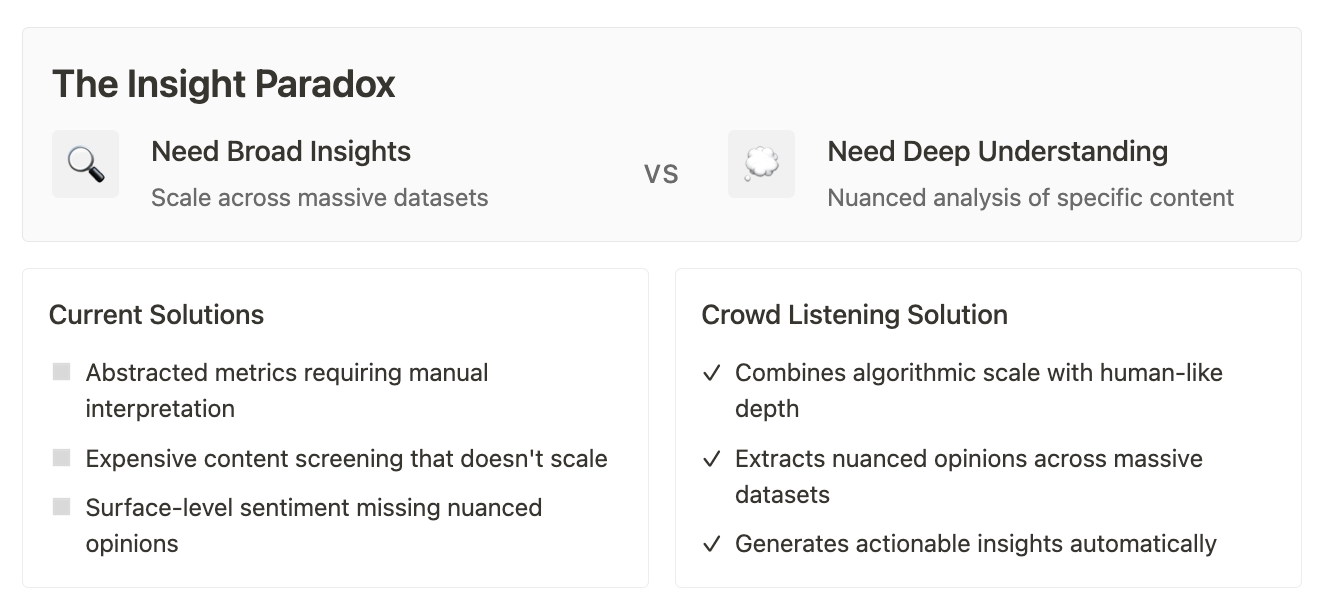

Traditional search paradigms face fundamental limitations in today’s information-rich environment. Users often struggle to articulate their information needs precisely, leading to iterative query refinement and incomplete discovery. Current search systems prioritize keyword matching over intent understanding, resulting in high precision for specific queries but poor recall for exploratory or contextual searches.

The challenge extends beyond technical limitations to cognitive ones. Users don’t always know what they’re looking for until they find it, making traditional query-based search inadequate for discovery scenarios. This creates a significant opportunity for AI-enhanced systems that can understand and anticipate user needs rather than simply matching keywords.

The transformation of search and discovery systems follows a three-stage evolutionary framework that addresses increasingly sophisticated user needs and capabilities. This progression moves from basic content retrieval through enhanced scenario coverage to fundamentally reimagined discovery experiences.

The foundational stage focuses on perfecting core search functionality while establishing the infrastructure for more advanced capabilities. Precise Content Location ensures that users who know exactly what they’re looking for can find it efficiently through refined keyword search that delivers accurate results for specific queries. Experience Completeness enhances user interaction through comprehensive search history that enables users to revisit and build upon previous discoveries, intelligent input prediction that accelerates query formulation, and seamless cross-session continuity that maintains context across multiple search interactions.

Recommendation Integration introduces proactive discovery elements that complement traditional search, including contextual suggestions that surface relevant content based on current search behavior, curated entry points that guide users toward high-quality content collections, and algorithmic recommendations that leverage user patterns to suggest potentially valuable discoveries.

The expansion stage addresses the limitations of traditional search by supporting more nuanced and complex user needs. Fuzzy Requirements Support enables the system to interpret and respond to ambiguous or incomplete user expressions through natural language processing that understands intent beyond keywords, semantic matching that connects user needs with relevant content even when terminology differs, and contextual interpretation that considers the user’s situation and background when generating responses.

Personalization with Transparency creates tailored experiences while maintaining user understanding and control. The system provides clear reasoning for recommendations that explains why specific content appears relevant, develops adaptive algorithms that learn from user interactions while respecting privacy preferences, and implements granular search capabilities that support both high-level topic exploration and detailed, specific content discovery.

Multi-Granularity Discovery supports different levels of content exploration, from individual article or viewpoint-level recommendations that address specific questions or perspectives, to comprehensive collection-level suggestions that provide structured learning paths or thematic exploration opportunities.

The transformation stage represents a fundamental shift from search as retrieval to search as an intelligent creation and curation platform. Knowledge Association and Generation enables the system to synthesize personalized content that matches individual consumption preferences, connecting disparate information sources into coherent, customized presentations that serve specific user needs and contexts.

Adaptive Content Matching ensures that discovered or generated content aligns with current user capabilities and preferences, including difficulty calibration that presents information at appropriate complexity levels, modality optimization that delivers content in formats best suited to user preferences and contexts, and contextual relevance that considers the user’s immediate goals and longer-term interests.

Dynamic Content Creation addresses gaps in existing content through real-time synthesis that generates answers or explanations when suitable existing content cannot be found, asynchronous content development that creates comprehensive resources for recurring user needs, and collaborative generation that combines AI capabilities with human expertise to produce high-quality, tailored content.

This evolutionary framework recognizes that modern search must progress beyond simple information retrieval to become an intelligent partner in knowledge discovery, learning, and content creation. Each stage builds upon the previous one while introducing fundamentally new capabilities that expand what users can accomplish through search interactions.

| Platform Type | Strengths | Weaknesses |
|---|---|---|
| Traditional Search Engines | Comprehensive indexing, fast retrieval, established user behavior patterns | Limited contextual understanding, poor handling of ambiguous queries, difficulty with exploratory search |
| Recommendation Systems | Personalization capabilities, learning from user behavior, serendipitous discovery | Filter bubble effects, cold start problems, limited transparency |
| AI-Enhanced Platforms | Natural language understanding, conversational interfaces, contextual awareness | Computational costs, accuracy concerns, user trust issues |

The AI integration framework follows a three-phase approach designed to incrementally enhance search capabilities while maintaining user trust and system reliability.

The foundation phase implements LLM-powered query understanding that can interpret ambiguous or incomplete queries, transforming user intent into actionable search parameters. This includes suggesting query refinements and alternatives based on contextual understanding, extracting clear intent from natural language descriptions, and providing contextual query expansion that broadens search scope while maintaining relevance. This phase establishes the groundwork for more sophisticated AI interactions while delivering immediate value to users struggling with traditional keyword-based search limitations.

Building on enhanced query understanding, the content synthesis phase develops advanced generation capabilities that summarize multiple sources into coherent overviews, enabling users to quickly grasp complex topics from diverse perspectives. The system generates comparative analyses across different viewpoints, creates personalized explanations based on user background and expertise level, and synthesizes comprehensive answers from distributed information sources. This phase transforms search from simple information retrieval into intelligent content curation that adapts to individual user needs and contexts.

The final phase creates interactive discovery experiences that fundamentally change how users explore information. This includes sophisticated follow-up question generation that guides users toward deeper understanding, exploratory conversation flows that encourage serendipitous discovery, contextual recommendations that surface related concepts and ideas, and learning path suggestions that create structured journeys through complex domains. This phase represents the full realization of AI-enhanced search, where the system becomes a collaborative partner in knowledge discovery rather than simply a retrieval tool.

Effective AI-enhanced search interfaces must design for complexity while maintaining simplicity. The progressive disclosure approach starts with simple, high-level answers that provide immediate value while indicating deeper information availability. Clear pathways to more detailed information allow users to drill down based on their specific needs and interests. Topic exploration through related concepts creates natural discovery paths that expand user understanding beyond their original query. Supporting both focused and exploratory search modes ensures the interface adapts to different user intents and discovery styles.

Modern search interfaces benefit from integrating chat-like interactions that feel natural and intuitive. Contextual follow-up questions guide users toward more specific or broader explorations based on their demonstrated interests. Maintaining conversation history and context enables the system to build understanding over multiple interactions, creating more personalized and relevant responses. Clarification and refinement capabilities allow users to iteratively improve search results through natural dialogue. Multi-turn information gathering transforms search from isolated queries into collaborative knowledge-building sessions.

Successful AI search systems must balance personalization benefits with user privacy and control concerns. Learning from interaction patterns rather than explicit profiling reduces user burden while building sophisticated understanding of preferences and needs. Transparency into personalization decisions builds user trust by explaining why certain results or recommendations appear. User control over recommendation intensity allows individuals to adjust the system’s proactive behavior based on their current goals and contexts. Balancing novelty with relevance ensures that personalization enhances rather than constrains discovery opportunities.
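One way to picture the balance between novelty and relevance is a single user-facing dial that blends two scores. The sketch below is illustrative only: the `Candidate` fields, the `novelty_weight` parameter, and the linear blend are assumptions, not something the framework above prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    title: str
    relevance: float  # 0..1, match to the current query (assumed precomputed)
    novelty: float    # 0..1, distance from the user's history (assumed precomputed)


def rank(candidates: list[Candidate], novelty_weight: float) -> list[Candidate]:
    """Order candidates by a linear blend of relevance and novelty.

    novelty_weight is the hypothetical user control:
    0.0 means pure relevance, 1.0 means pure novelty.
    """
    if not 0.0 <= novelty_weight <= 1.0:
        raise ValueError("novelty_weight must be between 0 and 1")

    def score(c: Candidate) -> float:
        return (1.0 - novelty_weight) * c.relevance + novelty_weight * c.novelty

    return sorted(candidates, key=score, reverse=True)
```

Exposing the weight directly to the user is one possible reading of "control over recommendation intensity"; a real system might hide it behind a few named presets.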

The most effective AI-enhanced search systems combine traditional infrastructure with advanced AI capabilities rather than replacing existing systems entirely. Maintaining fast keyword-based retrieval for precise queries ensures excellent performance for straightforward information needs. Layering AI enhancement for ambiguous or exploratory searches adds intelligence where it provides the most value. Implementing fallback mechanisms for AI system failures ensures reliability and user trust. Optimizing for both speed and understanding creates systems that excel across diverse use cases and user needs.
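The layering described above can be sketched as a small router: try the fast keyword path, hand off to an AI layer only when that path finds nothing, and degrade gracefully if the AI layer fails. The toy corpus, the "no exact hits means ambiguous" heuristic, and the `ai_search` callable are all assumptions made for illustration.

```python
from typing import Callable

# Toy corpus standing in for a real search index.
DOCS = {
    "doc1": "python list comprehension tutorial",
    "doc2": "introduction to vector embeddings",
    "doc3": "cooking pasta at home",
}


def keyword_search(query: str, require_all: bool = True) -> list[str]:
    """Return ids of documents containing all (or any) of the query terms."""
    terms = query.lower().split()
    if not terms:
        return []
    combine = all if require_all else any
    return [doc_id for doc_id, text in DOCS.items()
            if combine(term in text.split() for term in terms)]


def hybrid_search(query: str,
                  ai_search: Callable[[str], list[str]]) -> tuple[str, list[str]]:
    """Return (path_used, results), preferring the cheap keyword path."""
    hits = keyword_search(query)
    if hits:
        return "keyword", hits
    try:
        # Only ambiguous or exploratory queries reach the AI layer.
        return "ai", ai_search(query)
    except Exception:
        # AI layer failed: fall back to a looser any-term keyword match.
        return "fallback", keyword_search(query, require_all=False)
```

Returning the path alongside the results makes it easy to log how often each layer is used, which helps when tuning the handoff rule.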

Semantic search capabilities through vector embedding technology enable understanding of meaning beyond keyword matching. Document embedding for content similarity allows the system to find conceptually related information even when specific terminology differs. Query embedding for intent matching translates user needs into semantic space for more accurate retrieval. Contextual embedding updates based on user interaction enable the system to learn and adapt over time. Multi-modal embedding for diverse content types creates unified search experiences across text, images, audio, and other media formats.
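As a minimal sketch of embedding-based matching, the example below ranks documents by cosine similarity to a query vector. The three-dimensional vectors are hand-made placeholders; a real system would obtain them from an embedding model, which is not shown here.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


# Placeholder document embeddings (assumed, not produced by a real model).
DOC_VECTORS = {
    "intro to machine learning": [0.9, 0.1, 0.0],
    "neural networks explained": [0.8, 0.3, 0.1],
    "sourdough baking basics": [0.0, 0.1, 0.9],
}


def semantic_search(query_vector: list[float], top_k: int = 2) -> list[tuple[str, float]]:
    """Return the top_k (title, similarity) pairs, most similar first."""
    scored = [(title, cosine(query_vector, vec)) for title, vec in DOC_VECTORS.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

Because the query and the documents live in the same vector space, a query can match a document that shares none of its words, which is the property the paragraph above relies on.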

Adaptive systems that improve through use represent a key advantage of AI-enhanced search platforms. Capturing implicit feedback from user interactions provides rich signals about content quality and relevance without requiring explicit user effort. Updating recommendations based on session context enables dynamic adaptation to evolving user needs within single search sessions. Learning from successful discovery patterns helps the system recognize and replicate effective search strategies. Adapting to changing user needs over time ensures long-term relevance and value as user interests and expertise evolve.

Maintaining content quality in AI-enhanced systems requires comprehensive validation approaches. Source attribution and verification ensure that generated content maintains clear provenance and accuracy standards. Fact-checking against authoritative sources provides additional validation layers for critical information. Confidence scoring for generated responses helps users understand the reliability of AI-generated content. Human review workflows for critical information ensure that important decisions receive appropriate oversight and validation.

Measuring the effectiveness of AI-enhanced discovery requires sophisticated assessment approaches beyond traditional engagement metrics. Task completion rate analysis reveals whether users successfully achieve their information goals. User satisfaction with discovery outcomes provides direct feedback about system value and effectiveness. Time-to-insight metrics measure how quickly users can find valuable information and understanding. Exploration depth and breadth measurement assesses whether the system successfully encourages beneficial discovery behaviors.
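The metrics above could be computed from session logs along the following lines. The `Session` fields are hypothetical; in particular, how a session is labeled as "completed" and how exploration breadth is counted are assumptions that a real evaluation would need to define.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Session:
    completed: bool                      # did the user reach their goal (assumed label)
    seconds_to_insight: Optional[float]  # None when nothing useful was found
    topics_visited: int                  # simple proxy for exploration breadth


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Aggregate completion rate, median time-to-insight, and mean breadth."""
    if not sessions:
        raise ValueError("need at least one session")
    times = [s.seconds_to_insight for s in sessions if s.seconds_to_insight is not None]
    return {
        "task_completion_rate": sum(s.completed for s in sessions) / len(sessions),
        "median_time_to_insight_s": median(times) if times else float("nan"),
        "mean_topics_per_session": sum(s.topics_visited for s in sessions) / len(sessions),
    }
```

The median is used for time-to-insight so that a few very long sessions do not dominate the summary.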

AI systems require ongoing monitoring to ensure fair and equitable information access. Search result ranking and selection algorithms must be regularly audited for potential biases that could disadvantage certain topics, perspectives, or user groups. Content recommendation algorithms need continuous evaluation to prevent filter bubble effects that limit user exposure to diverse viewpoints. Personalization filter effects require monitoring to ensure that customization enhances rather than constrains user discovery opportunities. Representation across different user groups must be regularly assessed to ensure equitable access and value delivery.

| Quarter | Focus Area | Key Deliverables |
|---|---|---|
| Q1: Foundation | Basic AI Integration | LLM query enhancement, semantic search deployment, content quality frameworks, pilot user testing |
| Q2: Enhancement | Advanced Features | Conversational discovery, personalization algorithms, content synthesis capabilities, infrastructure scaling |
| Q3: Optimization | Data-Driven Improvement | Algorithm refinement, UI enhancement, advanced quality assurance, expanded content integration |
| Q4: Scale | Full Deployment | Complete AI-enhanced search, comprehensive testing, advanced analytics, next-generation planning |

An intelligent book search system that understands your needs and provides personalized recommendations using GPT-4o.

Traditional book discovery suffers from several fundamental limitations that prevent readers from finding content that truly resonates with their needs and interests. Conventional search relies on exact keyword matches, failing to understand the nuanced intent behind user queries and missing opportunities for meaningful discovery. Most systems provide broad, one-size-fits-all suggestions without considering the user’s specific emotional state, learning goals, or contextual needs, resulting in generic recommendations that often miss the mark.

People frequently remember books by fragments rather than complete details, recalling a powerful quote, a character trait, or an emotional impact but struggling to translate these memories into findable search terms. Current systems cannot distinguish between fundamentally different user intents, such as needing practical advice versus wanting to explore ideas versus seeking emotional catharsis, leading to misaligned recommendations that frustrate rather than inspire.

AI Book Search represents a paradigm shift from information retrieval to intelligent content curation. Instead of searching for books, users can now search through their intentions, memories, and needs. The system acts as a knowledgeable librarian who understands not just what you’re asking, but why you’re asking and how to help you discover content that truly resonates with your current situation and interests.

The breakthrough lies in intent-aware content discovery that combines semantic understanding with contextual curation. The AI interprets the deeper meaning behind queries, not just surface keywords, enabling understanding of complex, nuanced requests. Recommendations adapt to user intent categories, providing contextually relevant content that matches both explicit needs and implicit desires.

Memory-based discovery allows users to search using fragments of memory, including quotes, character descriptions, and plot elements, transforming partial recollections into successful book discoveries. Progressive learning journeys ensure that content cards form logical progressions from foundational to advanced concepts, supporting sustained learning and exploration. Multi-modal content integration seamlessly combines books, podcasts, articles, and quotes into cohesive recommendations that provide comprehensive coverage of user interests.

The system aims to dramatically reduce discovery friction, transforming the typical 15+ minute browsing session into instant, relevant recommendations that immediately connect with user needs. Enhanced learning outcomes result from curated progressions that build knowledge systematically rather than randomly. Emotional resonance ensures that content matches not just intellectual interests but emotional and contextual needs, creating more meaningful reading experiences. Democratizing expert curation provides every user with personalized librarian-level guidance, regardless of their access to professional recommendation services.

Rather than retrofitting AI onto traditional search, the system builds entirely around LLM capabilities to create fundamentally new discovery experiences. Query understanding through GPT-4o analyzes user intent before any content retrieval, ensuring that recommendations address actual needs rather than surface keyword matches. Contextual generation creates content cards based on analyzed intent rather than pre-stored data, enabling dynamic responses that adapt to specific user contexts.

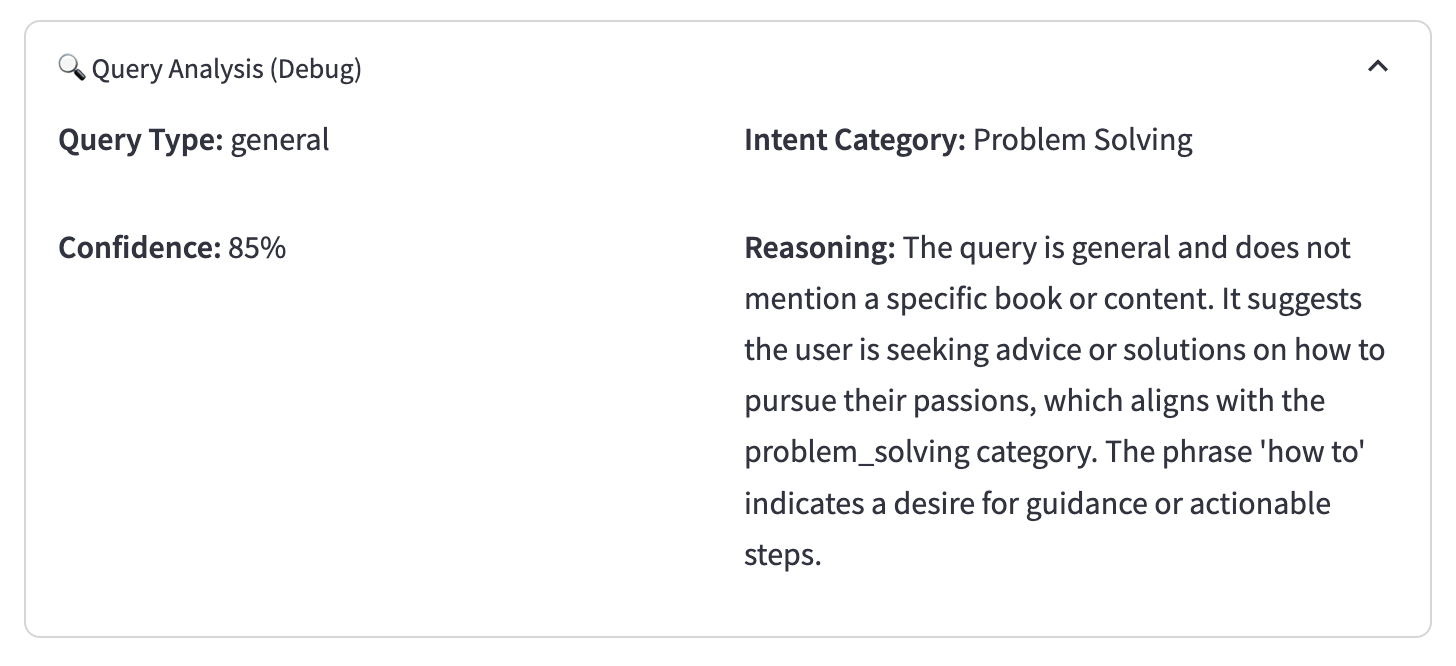

Progressive disclosure reveals information in logical layers, moving from intent analysis through content generation to card rendering. Simple queries receive focused responses while complex topics get comprehensive exploration, ensuring appropriate depth without overwhelming users. A debug mode lets developers inspect the AI decision-making process, which supports continuous improvement.

Modular component design ensures that each system element has a single responsibility, creating maintainable and extensible architecture. Type-safe data structures using Pydantic provide reliable data handling, while isolated AI interactions with fallback handling ensure system resilience. Reusable, stateless rendering functions support consistent user experience, and pure orchestration between components maintains clean system architecture.

The core technology stack centers on Streamlit for rapid prototyping and clean UI development, enabling quick iteration and user testing. OpenAI GPT-4o provides sophisticated query analysis and content generation capabilities that understand nuanced user intent. Pydantic ensures type-safe JSON processing that maintains data integrity throughout the system. The app is designed for deployment on Streamlit Cloud, with configuration supplied through environment variables, which supports scalable hosting and easy maintenance.

Key technical decisions prioritize AI-generated content over database search to enable understanding of nuanced, contextual queries that wouldn’t match traditional database keywords. This approach allows creative, synthesized recommendations that combine multiple sources while eliminating the need for massive content databases in the prototype phase. The system provides immediate value without complex data ingestion pipelines. The trade-off is lower precision on specific book details in exchange for higher relevance in intent-based discovery.

Intent-category classification into seven predefined categories before content generation provides structured understanding of user needs and enables category-specific prompt engineering for better results. This creates predictable, testable system behavior while allowing targeted improvements per intent type. Implementation through a two-stage LLM pipeline with structured JSON responses ensures reliable and interpretable results.

Dynamic card generation produces one to five content cards based on query complexity, ensuring that simple queries receive focused, direct answers while complex topics benefit from multi-perspective exploration. This approach avoids overwhelming users with irrelevant content while creating natural learning progressions that support sustained engagement.

Component-based UI design builds interfaces from reusable, type-specific content cards that support diverse content types including books, podcasts, quotes, and themes. This enables consistent styling across different recommendation types while facilitating easy extension for new content types and providing better responsive design capabilities.

The data flow follows a clear progression from user query through intent analysis to content generation and finally UI rendering. Raw user queries captured via Streamlit undergo GPT-4o analysis that classifies query type and intent category. Category-specific prompts then generate relevant content cards, with Pydantic models ensuring type safety and structure. Dynamic card components render based on content type, while graceful fallbacks at each stage maintain system reliability even when individual components encounter issues.
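The flow above can be sketched as two LLM stages, each with its own fallback. This is a simplified illustration, not the project's code: the `LLM` callable stands in for a GPT-4o call, plain dictionaries stand in for the Pydantic models, and the prompt wording, field names, and fallback values are all assumptions.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # takes a prompt, returns raw model text (stand-in for GPT-4o)

# Hypothetical defaults used when a stage fails.
FALLBACK_ANALYSIS = {"query_type": "general", "intent_category": "exploration_discovery"}
FALLBACK_CARDS = [{"type": "theme", "title": "Try rephrasing your search"}]


def analyze_query(query: str, llm: LLM) -> dict:
    """Stage 1: classify the query; use a default analysis on any failure."""
    try:
        data = json.loads(llm(f"Classify this book query as JSON: {query}"))
        if not isinstance(data, dict) or "intent_category" not in data:
            raise ValueError("analysis is missing intent_category")
        return data
    except Exception:
        return dict(FALLBACK_ANALYSIS)


def generate_cards(query: str, analysis: dict, llm: LLM) -> list[dict]:
    """Stage 2: ask for one to five cards; use a default card on any failure."""
    prompt = (f"Intent: {analysis['intent_category']}. "
              f"Return 1-5 content cards as a JSON list for: {query}")
    try:
        cards = json.loads(llm(prompt))
        if not isinstance(cards, list) or not cards:
            raise ValueError("expected a non-empty list of cards")
        return cards[:5]  # enforce the upper bound on card count
    except Exception:
        return list(FALLBACK_CARDS)


def run_pipeline(query: str, llm: LLM) -> dict:
    """Orchestrate both stages; rendering is left to the UI layer."""
    analysis = analyze_query(query, llm)
    return {"analysis": analysis, "cards": generate_cards(query, analysis, llm)}
```

Passing the model in as a callable keeps each stage testable without network access, which fits the isolated-AI-interaction design described earlier.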

The system includes sophisticated specific book detection that identifies when users ask about particular titles, enabling targeted responses with detailed information about known works. Intent classification categorizes general queries into seven distinct types that cover the spectrum of user needs and discovery patterns.

Problem-solving queries like “How to deal with difficult colleagues” receive practical, actionable recommendations focused on applicable knowledge and skills. Exploration and discovery requests such as “Mind-bending science books (not too technical)” generate curated selections that balance intellectual challenge with accessibility. Quote and concept memory searches like “Books about ‘flow state’” help users rediscover influential ideas and their sources.

Plot fragment memory searches such as “Book with a girl counting prime numbers” transform partial story recollections into successful identifications. Character and scene description queries like “London autistic detective” leverage memorable details to find specific works. Emotional and thematic searches such as “Books that will make me cry (in a good way)” connect users with content that matches their desired emotional experience. Comparative searches like “Like Harry Potter but for adults” help users find similar works that match their established preferences while introducing new elements.
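The seven categories and their example queries could be captured in an enumeration like the one below. The class name `UserIntentCategory` follows the data model named in this write-up; the member names, string values, and the choice of default category are assumptions.

```python
from enum import Enum


class UserIntentCategory(str, Enum):
    PROBLEM_SOLVING = "problem_solving"
    EXPLORATION_DISCOVERY = "exploration_discovery"
    QUOTE_CONCEPT_MEMORY = "quote_concept_memory"
    PLOT_FRAGMENT_MEMORY = "plot_fragment_memory"
    CHARACTER_SCENE = "character_scene_description"
    EMOTIONAL_THEMATIC = "emotional_thematic"
    COMPARATIVE = "comparative"


# Example queries taken from the text above, one per category.
EXAMPLE_QUERIES = {
    UserIntentCategory.PROBLEM_SOLVING: "How to deal with difficult colleagues",
    UserIntentCategory.EXPLORATION_DISCOVERY: "Mind-bending science books (not too technical)",
    UserIntentCategory.QUOTE_CONCEPT_MEMORY: "Books about 'flow state'",
    UserIntentCategory.PLOT_FRAGMENT_MEMORY: "Book with a girl counting prime numbers",
    UserIntentCategory.CHARACTER_SCENE: "London autistic detective",
    UserIntentCategory.EMOTIONAL_THEMATIC: "Books that will make me cry (in a good way)",
    UserIntentCategory.COMPARATIVE: "Like Harry Potter but for adults",
}


def parse_category(label: str) -> UserIntentCategory:
    """Map a model-produced label to a category, with a default for unknown labels."""
    try:
        return UserIntentCategory(label.strip().lower())
    except ValueError:
        return UserIntentCategory.EXPLORATION_DISCOVERY  # assumed default
```

A closed set of labels like this is what makes per-category prompts and per-category testing possible.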

Responsive card design adapts to different content types with distinct visual approaches for quotes, summaries, recommendations, and themes. Book recommendation cards provide rich information including relevance scores and detailed reasoning that helps users understand why specific books match their needs. Quote cards feature special formatting with book attribution and page numbers that enable easy reference and verification. Work-in-progress components with preview data demonstrate planned features and gather user feedback for future development.

Real GPT-4o integration ensures sophisticated query analysis and content generation that understands complex user intent and context. Structured JSON responses rendered into beautiful UI components provide consistent, reliable user experiences. Fallback handling for API errors ensures system reliability even when external services encounter issues. Debug mode enables understanding of query analysis processes, supporting system improvement and user education about AI decision-making.

The system employs flexible data models including QueryType classifications for specific books versus general queries, UserIntentCategory definitions covering seven predefined categories, and ContentCard systems with type-specific rendering capabilities. BookRecommendation models provide rich information with reasoning, while PlaceholderFeature components support future development.
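A rough sketch of these models and their type-specific rendering follows. To stay self-contained it uses standard-library dataclasses as a stand-in for Pydantic, and the field names, the 0 to 1 score range, and the `QuoteCard` and `render_card` names are assumptions rather than the project's actual definitions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Union


class QueryType(str, Enum):
    SPECIFIC_BOOK = "specific_book"
    GENERAL = "general"


@dataclass(frozen=True)
class BookRecommendation:
    title: str
    author: str
    relevance_score: float  # assumed to lie in 0..1
    reasoning: str

    def __post_init__(self) -> None:
        # Validation that a Pydantic field constraint would otherwise provide.
        if not 0.0 <= self.relevance_score <= 1.0:
            raise ValueError("relevance_score must be between 0 and 1")


@dataclass(frozen=True)
class QuoteCard:
    text: str
    book_title: str
    page: int


ContentCard = Union[BookRecommendation, QuoteCard]


def render_card(card: ContentCard) -> str:
    """Stateless, type-specific rendering to a plain-text line."""
    if isinstance(card, BookRecommendation):
        return (f"[book] {card.title} by {card.author} "
                f"({card.relevance_score:.0%}): {card.reasoning}")
    if isinstance(card, QuoteCard):
        return f'[quote] "{card.text}" ({card.book_title}, p. {card.page})'
    raise TypeError(f"unsupported card type: {type(card).__name__}")
```

Adding a new content type then means adding one model and one rendering branch, which is the extensibility the component-based design aims for.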

API integration utilizes OpenAI GPT-4o for all AI processing with structured JSON responses and Pydantic validation ensuring data integrity. Error handling and fallback responses maintain system reliability, while temperature controls optimize output for different use cases and user needs.

User interface features include custom CSS styling with gradients and animations that create engaging visual experiences. Responsive card layouts adapt to various screen sizes and content types. Debug mode supports development and troubleshooting, while comprehensive loading states and error handling ensure smooth user experiences even when systems encounter difficulties.

Planned enhancements include semantic vector search capabilities that leverage advanced embedding-based approaches for even more sophisticated content discovery. User preference learning from search history will enable increasingly personalized recommendations that improve over time. Book database integration will provide access to real book data and availability information, enhancing the practical utility of recommendations.

Social features will enable sharing and collaborative recommendations, creating community-driven discovery experiences. Mobile optimization through progressive web app features will extend access across devices and usage contexts. The modular architecture supports continuous enhancement and experimentation with new recommendation techniques while maintaining stable, high-quality user experiences.

The system represents a foundation for increasingly sophisticated content discovery applications that understand and serve individual user needs through intelligent, adaptive technology. As user data accumulates and understanding of content consumption patterns improves, the system will evolve to provide even more valuable and relevant discovery experiences that genuinely enhance rather than simply automate the content discovery process.

October 5, 2024

We live in an age of information abundance, yet many of us struggle with two fundamental learning challenges: we don’t know what to read, and we don’t understand what we’ve read. These pain points—“not knowing how to choose” and “not knowing how to comprehend”—represent a massive opportunity for reimagining how we interact with knowledge.

The core insight driving next-generation learning interfaces is simple but profound: most people don’t know what they don’t know. We can’t formulate good questions about topics we’re unfamiliar with, yet traditional learning systems expect us to do exactly that. Conversational AI is well placed to remove this barrier by flipping the interaction model: the system proposes questions instead of waiting for them.

Google’s Learn About product offers a compelling glimpse of this future. Unlike traditional search, which requires users to know what to look for, Learn About allows users to “zoom out and look at the space of questions around your question.” It combines the information accuracy of search with the flexible, dynamic interaction of AI chat, creating an exploratory learning experience that goes far beyond simple Q&A.

This approach represents a fundamental shift from information retrieval to knowledge discovery. Instead of returning static results, the system actively helps users explore adjacent concepts and ask better questions. Users can pursue their immediate curiosity while simultaneously discovering related topics they never thought to investigate.

The most innovative learning interfaces take this concept further by specializing in specific domains. Rather than trying to handle all possible queries, they focus on particular knowledge areas—like literature, technical documentation, or professional development—where they can provide genuinely superior experiences compared to general-purpose tools.

The technical foundation of these systems relies on sophisticated prompt engineering and modular content generation. Large language models serve as the cognitive engine, but their raw output must be carefully structured to create coherent learning experiences. The key innovation lies in using LLMs to generate JSON-formatted responses that populate predefined UI templates, creating consistent yet dynamic interfaces.

This architecture allows the system to maintain conversational flow while presenting information in learner-friendly formats. For example, instead of generating wall-of-text responses, the LLM outputs structured data that renders as interactive cards, related questions, and exploration pathways. Each response includes not just content, but also suggested next steps and connection points to related topics.
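To make the JSON-to-template idea concrete, the sketch below parses a structured model response and fills a fixed text template. The field names (`title`, `summary`, `next_questions`) and the template layout are assumptions chosen for illustration; an actual interface would render UI components rather than text.

```python
import json

# A predefined template; the model supplies only the data that fills it.
TEMPLATE = """## {title}
{summary}

Explore next:
{questions}"""

REQUIRED_FIELDS = {"title", "summary", "next_questions"}


def render_response(raw_json: str) -> str:
    """Validate a structured LLM response and render it into the template."""
    data = json.loads(raw_json)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    questions = "\n".join(f"- {q}" for q in data["next_questions"])
    return TEMPLATE.format(title=data["title"],
                           summary=data["summary"],
                           questions=questions)
```

Because the layout lives in the template rather than in the model output, every response looks consistent even though its content is generated on the fly.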

The prompt engineering becomes crucial here. Effective systems use detailed behavioral instructions that guide the LLM to act as a knowledgeable teacher rather than a simple question-answering service. These prompts specify tone, content depth, interaction style, and response structure, ensuring consistency across thousands of potential learning conversations.

Traditional learning interfaces suffer from what could be called “prompt friction”—the cognitive overhead of formulating good questions and organizing complex thoughts into text. The most successful knowledge interfaces minimize this friction through several design strategies.

First, they embed potential questions directly into content responses. Instead of requiring users to think of follow-up questions, the system generates three or four relevant next steps that users can explore with a simple click. This transforms learning from an active questioning process into a guided exploration where curiosity can flow naturally.

Second, they use modular response formats that pack high knowledge density into digestible chunks. Rather than lengthy explanations, responses combine concise answers with interactive elements: reflection prompts, knowledge checks, relevance connections, and vocabulary builders. Users receive exactly the information they need while being invited to go deeper on specific aspects that interest them.

Third, they implement “prompt prefills”—pre-written questions and conversation starters that help users begin productive dialogues without staring at blank input fields. These aren’t generic suggestions but contextually relevant questions based on the current topic and common learning patterns.

Unlike traditional recommendation systems that rely on explicit preferences or behavioral tracking, conversational learning interfaces build user understanding organically through dialogue. Each interaction reveals information about the user’s background knowledge, interests, learning goals, and preferred depth of explanation.

This conversational profiling enables increasingly sophisticated personalization. The system learns whether a user prefers concrete examples or abstract concepts, detailed explanations or high-level overviews, historical context or contemporary applications. Over time, responses become naturally calibrated to individual learning styles and knowledge levels.

The personalization extends beyond content delivery to include book recommendations, topic suggestions, and learning path optimization. By understanding what concepts a user struggles with and what types of explanations resonate, the system can proactively surface relevant material and adapt its teaching approach in real-time.

Behind the conversational interface lies a sophisticated knowledge management system. Rather than relying solely on LLM training data, effective learning platforms implement Retrieval-Augmented Generation (RAG) architectures that combine real-time information retrieval with language generation.

This approach proves particularly valuable for specialized domains like literature analysis, where high-quality, curated knowledge sources significantly improve response accuracy and depth. Systems can draw from structured databases of book analyses, expert commentary, reader discussions, and academic sources to provide richer, more authoritative answers than general-purpose models alone.

The challenge lies in balancing different information sources. Community discussions from platforms like Reddit offer authentic reader perspectives and common questions, while academic sources provide authoritative analysis. Professional reviews and curated summaries add editorial quality. Effective systems learn to synthesize these different knowledge types based on the specific question and user context.

Traditional educational metrics often miss the point of exploratory learning. While engagement metrics like session length and click-through rates provide some insight, the real value lies in knowledge acquisition and curiosity development. The most meaningful measures focus on learning outcomes: Do users ask better questions over time? Do they make novel connections between concepts? Do they pursue deeper investigation of topics that initially seemed uninteresting?

Advanced systems track conversation quality through several indicators: the progression from basic to sophisticated questions, the frequency of cross-topic connections, the depth of follow-up exploration, and user-generated insights that suggest genuine understanding. These metrics help optimize not just for engagement, but for actual learning effectiveness.

As these interfaces mature, they point toward a fundamental transformation in how we approach knowledge work. Instead of consuming information passively, we’ll increasingly collaborate with AI systems to explore ideas, test understanding, and discover unexpected connections. The goal isn’t to replace human thinking but to augment it with better tools for curiosity and exploration.

The most promising applications extend beyond individual learning to collaborative knowledge building. Imagine research environments where teams can explore complex topics together, with AI facilitators helping surface relevant connections, identify knowledge gaps, and guide productive discussions. Or educational settings where students learn not just facts but how to ask increasingly sophisticated questions about any domain.

The technical foundation already exists. The remaining challenge is design: creating interfaces that feel natural, educational experiences that genuinely improve understanding, and systems that scale personalized learning without losing the human touch that makes great teaching transformative.

The next time you encounter a complex topic, imagine having a knowledgeable guide who not only answers your questions but helps you discover the questions you didn’t know to ask. That’s the promise of intelligent knowledge interfaces—and it’s closer than you might think.

September 20, 2024

In the rapidly evolving landscape of artificial intelligence, we’re witnessing a fundamental shift in how we consume and interact with knowledge. While early AI applications focused primarily on content summarization and conversion between modalities, the next generation of AI-native products promises something far more transformative: the ability to create truly personalized learning experiences that adapt to individual needs, interests, and cognitive patterns.

Today’s AI content tools largely excel at taking existing information and reformatting it into different modalities. We can convert text to audio, create video summaries, or generate podcast-style conversations from written material. However, as Large Language Model context windows continue to expand, simple content summarization becomes increasingly commoditized. The real value lies not in these mechanical transformations, but in the creative synthesis and novel perspectives that emerge when AI systems understand both the content and the consumer.

The essence of creativity lies in finding fresh angles of approach. Quality content distinguishes itself through novel perspectives, clear structure, and genuine utility to the reader. As we move beyond basic summarization, the challenge becomes how to help AI systems discover these unique entry points that make content both engaging and personally relevant.

We’re entering an era of “knowledge liquefaction” where any piece of information can be rapidly transformed into formats that match specific consumption scenarios. Whether someone needs structured learning materials for deep study or fragmentary content for casual listening during commutes, AI systems can now adapt the same core knowledge to fit these different contexts seamlessly.

This capability extends far beyond simple format conversion. The most compelling applications combine high-quality human-created content with AI’s ability to find unexpected connections and generate personalized frameworks. Rather than replacing human creativity, these systems amplify it by identifying patterns and relationships that might not be immediately obvious, then presenting them through personalized lenses that resonate with individual users.

Creating truly personalized content presents a fundamental tension between scale and customization. If every piece of content requires individual adaptation for each user, the costs become prohibitive. However, knowledge fusion offers a solution through its inherent modularity. Many elements of content remain constant across audiences—core concepts, fundamental principles, and essential facts—while the variable elements involve how these concepts connect to individual interests, goals, and existing knowledge.

The key insight is that personalization doesn’t require generating entirely new content for each user. Instead, it involves intelligent selection and combination of existing content elements, supplemented by targeted customization that creates meaningful connections to the user’s specific context and needs.

Modern AI systems have unprecedented access to rich user interaction data through natural language conversations, reading highlights, and behavioral patterns. Unlike traditional recommendation systems that rely primarily on click-through data, AI-native platforms can analyze the semantic content of user queries, the topics they explore, and the questions they ask to build sophisticated models of their interests and learning preferences.

This approach moves beyond simple topic matching to understand cognitive patterns and learning styles. For example, the system might recognize that one user prefers concrete examples and case studies, while another gravitates toward theoretical frameworks and abstract principles. These insights enable the generation of content that not only covers relevant topics but presents them in ways that align with how each individual processes and retains information.

The most sophisticated AI-native learning platforms implement memory architectures inspired by human cognition, incorporating episodic memory for recent interactions, semantic memory for abstracted patterns, and procedural memory for learned preferences and behaviors. This multi-layered approach enables systems to maintain context over time while continuously refining their understanding of user needs.
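As a rough illustration of the three memory layers described above, the sketch below keeps a bounded window of recent queries (episodic), running topic counts (semantic), and a small dictionary of learned preferences (procedural). The class name, field names, and the window size of 20 are assumptions made for this example, not details of any particular platform.

```python
from collections import Counter, deque
from dataclasses import dataclass, field


@dataclass
class LearnerMemory:
    """Three memory layers for one learner (all names are illustrative)."""
    # Episodic: a bounded window of the most recent raw interactions.
    episodic: deque = field(default_factory=lambda: deque(maxlen=20))
    # Semantic: topic counts abstracted from every interaction so far.
    semantic: Counter = field(default_factory=Counter)
    # Procedural: learned preferences about how to respond.
    procedural: dict = field(default_factory=dict)

    def record(self, query, topics, preferred_style=None):
        """Store one interaction in all relevant layers."""
        self.episodic.append(query)
        self.semantic.update(topics)
        if preferred_style:
            self.procedural["explanation_style"] = preferred_style

    def context(self, n_recent=3, n_topics=3):
        """Summarize memory into a context dict for the next response."""
        return {
            "recent": list(self.episodic)[-n_recent:],
            "top_topics": [t for t, _ in self.semantic.most_common(n_topics)],
            "preferences": dict(self.procedural),
        }


memory = LearnerMemory()
memory.record("What is Stoicism?", ["philosophy"])
memory.record("Who was Seneca?", ["philosophy", "history"],
              preferred_style="concrete examples")
ctx = memory.context()
```

Keeping the layers separate means the recent window can be trimmed aggressively while the topic counts and preferences persist across sessions.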

Rather than treating each interaction as isolated, these systems build cumulative knowledge about user interests, expertise levels, and learning goals. They can recognize when someone is exploring a new domain versus deepening existing knowledge, and adjust their content generation accordingly. This longitudinal understanding becomes increasingly valuable as it enables the system to suggest unexpected but relevant connections between seemingly disparate areas of interest.

The ultimate vision extends beyond personalized recommendation to adaptive content creation. Imagine a system that can take a classic work like Sun Tzu’s “The Art of War” and generate multiple interpretations tailored to different audiences and applications. For a business professional, it might emphasize strategic planning and competitive analysis. For a parent, it could explore family dynamics and conflict resolution. For a student, it might focus on historical context and philosophical implications.

Each version would maintain the core insights of the original work while presenting them through frameworks and examples that resonate with the specific audience. This approach recognizes that great ideas have universal applicability, but their accessibility depends heavily on how they’re presented and contextualized.

Building these capabilities requires sophisticated orchestration of multiple AI systems working in concert. Content generation engines must work alongside user modeling systems, recommendation algorithms, and quality control mechanisms. The challenge lies not just in generating personalized content, but in ensuring it maintains accuracy, coherence, and genuine value while adapting to individual preferences.

Recent advances in multimodal AI and agent-based architectures provide the technical foundation for these applications. Tools like MCP (Model Context Protocol) servers enable modular, composable AI capabilities that can be combined and recombined to address specific user needs. This architectural approach allows for the kind of flexible, adaptive content generation that personalized learning requires.

As we look toward the future of AI-native learning platforms, the focus shifts from simple automation of existing processes to the creation of entirely new forms of educational experience. The most successful applications will be those that understand the deep relationship between content, context, and individual cognition, using this understanding to create learning experiences that are not just personalized, but genuinely transformative.

The transition from traditional content consumption to AI-enhanced learning represents more than a technological upgrade. It’s a fundamental reimagining of how knowledge can be packaged, presented, and absorbed in ways that honor both the richness of human understanding and the unique cognitive patterns of individual learners. In this future, every question becomes an opportunity for personalized exploration, and every piece of content becomes a starting point for deeper, more meaningful engagement with ideas.

August 15, 2024

Authors: Terry Chen, Allyson Lee

Effective coaching in project-based learning environments is critical for developing students’ self-regulation skills, yet scaling high-quality coaching remains a challenge. This paper presents an LLM-enhanced coaching system that supports project-based learning in two ways: it connects peers who are struggling with the same regulation gap, and it helps coaches by identifying regulation gaps and generating tailored practice suggestions. Our system integrates vector-based semantic matching with LLM-generated regulation gap categorizations for Context Assessment Plan (CAP) notes. Results demonstrate that our system effectively retrieves relevant coaching cases, reducing the cognitive burden on mentors while maintaining high-quality, context-aware feedback.

Training college students to tackle complex, open-ended innovation work requires developing strong regulation skills for self-directed work. Coaches guide the development of these regulation skills, helping students develop cognitive, motivational, emotional, and strategic behaviors needed to problem solve and reach desired outcomes. However, coaches face significant challenges in providing personalized guidance to multiple student teams.

Existing AI-based project management tools help track tasks but fail to capture nuanced ways students approach their work. Large Language Models (LLMs) show promise in analyzing text-based interactions and generating structured feedback, but their application to coaching remains underexplored.

To address these issues, we propose utilizing LLMs to develop and integrate three key technical innovations. First, Peer Connections facilitate connections between students with similar challenges. Second, Coaching Reflections help coaches analyze patterns and improve their practice through identifying regulation gaps. Finally, Practice Suggestions adapt similar cases to new situations.

Our system is built around a novel codebook consisting of regulation gap definitions and examples gathered across learning science literature. The codebook categorizes student regulation gaps in a tiered approach:

Tier 1 of our codebook includes three primary categories. Cognitive skills relate to approaching problems with unknown answers. Metacognitive skills involve planning, help-seeking, collaboration, and reflection. Emotional aspects cover dispositions toward self and learning that affect motivation.

The tier 2 categories provide more specific regulation gaps. These include representing problem and solution spaces, assessing risks, and critical thinking and argumentation. Additionally, they cover forming feasible plans, planning effective iterations, addressing fears and anxieties, and embracing challenges and learning.

Our system combines semantic similarity search with LLM-based analysis in a retrieval-augmented generation approach. The process begins when student regulation notes are pre-processed with metadata on tier 1 and tier 2 regulation gaps. These notes are then encoded into text embeddings, after which a vector database retrieves the most similar historical cases. Finally, an LLM (DeepSeek) generates structured responses that include a diagnosis of potential regulation gaps, practice suggestions targeted to these gaps, and references to similar historical cases.
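The retrieval and prompt-assembly steps of this pipeline can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual code: the embeddings are hand-written three-dimensional toy vectors, an in-memory list stands in for the vector database, the function names and prompt wording are hypothetical, and the LLM call itself is omitted.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_similar(query_vec, cases, k=5):
    """Return the k historical cases whose embeddings are closest to the query."""
    ranked = sorted(cases, key=lambda c: cosine(query_vec, c["embedding"]),
                    reverse=True)
    return ranked[:k]


def build_prompt(note_text, similar_cases):
    """Assemble a prompt that grounds the LLM in the retrieved cases."""
    lines = ["New CAP note:", note_text, "", "Similar historical cases:"]
    for i, case in enumerate(similar_cases, start=1):
        lines.append(f"{i}. [{case['tier1']} / {case['tier2']}] {case['text']}")
    lines += ["", "Diagnose the likely regulation gap, suggest practices, "
                  "and cite the cases above by number."]
    return "\n".join(lines)


# Toy case library: texts and embeddings are invented for illustration.
cases = [
    {"text": "Team skipped user testing before building.",
     "tier1": "Cognitive", "tier2": "Assessing risks",
     "embedding": [0.9, 0.1, 0.0]},
    {"text": "Plan assumed two features could ship in one week.",
     "tier1": "Metacognitive", "tier2": "Forming feasible plans",
     "embedding": [0.1, 0.9, 0.1]},
    {"text": "Student was afraid to share an early prototype.",
     "tier1": "Emotional", "tier2": "Addressing fears and anxieties",
     "embedding": [0.0, 0.2, 0.9]},
]
top = retrieve_similar([0.8, 0.2, 0.1], cases, k=2)
prompt = build_prompt(
    "Team built a full app without validating the riskiest assumption.", top)
```

The resulting `prompt` string would then be sent to the LLM, so that its diagnosis can reference concrete prior cases rather than generic advice.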

This grounds LLM suggestions in actual coaching experiences rather than generic advice, improving the relevance and actionability of recommendations.

We developed and tested three approaches to match students with similar regulation challenges:

The Baseline Semantic Approach uses vector embeddings to find similar cases based on textual similarity. The Weighted Semantic Similarity approach separates and weights regulation gap description (0.7) from contextual information (0.3). Our Hybrid LLM-Codebook Approach combines semantic matching with LLM-generated metadata using our regulation codebook.

The hybrid approach proved most effective, assigning the highest weight (0.5) to tier 2 categories and lower weights to tier 1 categories (0.1) and text content (0.2 each for gap text and context).
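One way to read these weights is as a single weighted score per candidate note. The sketch below assumes that category agreement is scored as an exact match (1.0 or 0.0) and that the two text components are compared with cosine similarity; the source does not state either detail, and the field names are hypothetical.

```python
import math

# Weights reported for the hybrid approach; they sum to 1.0.
WEIGHTS = {"tier2": 0.5, "tier1": 0.1, "gap_text": 0.2, "context": 0.2}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_score(query, candidate, weights=WEIGHTS):
    """Combine codebook-category agreement with text similarity."""
    # Assumption: categories either match exactly or not at all.
    tier1_match = 1.0 if query["tier1"] == candidate["tier1"] else 0.0
    tier2_match = 1.0 if query["tier2"] == candidate["tier2"] else 0.0
    gap_sim = cosine(query["gap_vec"], candidate["gap_vec"])
    ctx_sim = cosine(query["context_vec"], candidate["context_vec"])
    return (weights["tier2"] * tier2_match
            + weights["tier1"] * tier1_match
            + weights["gap_text"] * gap_sim
            + weights["context"] * ctx_sim)


# Toy notes with hand-written 2-d vectors in place of real embeddings.
note = {"tier1": "Emotional", "tier2": "Addressing fears and anxieties",
        "gap_vec": [1.0, 0.0], "context_vec": [0.0, 1.0]}
same_gap = dict(note)
other_gap = dict(note, tier1="Cognitive", tier2="Assessing risks")

score_same = hybrid_score(note, same_gap)    # all four components agree
score_other = hybrid_score(note, other_gap)  # only the text components agree
```

With identical text but different categories, the score is capped at 0.4, which shows how heavily the tier 2 label dominates the ranking.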

We evaluated each model against the same three notes, analyzing the top 5 returned similar notes. The semantic matching performed well when addressing cognitive and metacognitive gaps with repetitive terminology but struggled with emotional regulation gaps. The LLM-codebook approach showed promise in accurately identifying regulation gaps but was computationally intensive. The hybrid model consistently and efficiently identified notes with the same regulation gap while maintaining contextual similarity.

Our system effectively bridges the gap between human expertise and AI capabilities in coaching contexts. Key takeaways include Hybrid AI-Driven Case Retrieval, where combining LLM-driven metadata tagging with traditional semantic matching enables precision in retrieving relevant coaching cases, and Structured Codebooks for Domain-Specific AI, where our tiered classification system grounds LLM-based reasoning in expert-validated pedagogical frameworks.

Future work will focus on several areas of improvement. We plan to improve clarity of writing in notes and collect more data through alternative sources. Additionally, we aim to develop sub-categorized codebooks with specific examples and reasoning chains. Finally, we will explore more sophisticated reasoning methods like external knowledge bases or memory systems.

This research contributes to the broader field of AI-enhanced education and human-AI collaboration, offering insights into how AI can augment expert-driven mentoring in complex, open-ended learning settings.

July 10, 2024

Modern content platforms face a fundamental challenge: how to help users discover relevant, high-quality content that matches their interests while avoiding the trap of information overload. This challenge becomes particularly acute in podcast consumption, where users need to find content that not only aligns with their interests but also fits different consumption contexts and scenarios. An ideal recommendation system addresses these core problems through a sophisticated approach that combines content-based filtering, collaborative filtering, and contextual understanding to create a truly personalized discovery experience.

The core rationale behind the described recommendation system centers on the understanding that effective content discovery requires more than just matching keywords or categories. Users consume content differently based on their current context, mood, and goals. Sometimes they want to explore familiar territory and go deeper into topics they already know and love. Other times, they’re seeking fresh perspectives or entirely new domains to expand their horizons. A truly intelligent recommendation system must understand these nuanced user states and adapt accordingly.

This system addresses three fundamental problems in content discovery. First, the overwhelming volume of available content makes it difficult for users to find what they’re actually looking for. Second, traditional recommendation approaches often create filter bubbles that limit user discovery of potentially valuable content outside their established preferences. Third, most systems fail to account for the contextual nature of content consumption, treating all recommendation scenarios as identical when they’re fundamentally different.

Rather than attempting to build the perfect recommendation system from day one, this is a phased approach that evolves with our understanding of user behavior and available data. The initial phase focuses on content-based filtering using vector similarity matching, which provides an excellent solution to the cold start problem that plagues many recommendation systems. By representing both content and users as vectors and calculating cosine similarity, we can provide relevant recommendations even for new users with limited behavioral data.
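A minimal sketch of this cold-start step, assuming a tiny hand-built catalog whose vector dimensions stand for three made-up interest axes. The show titles and numbers are invented for illustration; a real system would use learned embeddings.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend(user_vec, catalog, k=3):
    """Rank catalog items by cosine similarity to the user vector."""
    scored = [(cosine(user_vec, vec), title) for title, vec in catalog.items()]
    scored.sort(reverse=True)
    return [title for _, title in scored[:k]]


# Hypothetical dimensions: [technology, business, health]
catalog = {
    "Startup Stories": [0.6, 0.8, 0.0],
    "Chip Design Weekly": [1.0, 0.1, 0.0],
    "Sleep Science": [0.0, 0.0, 1.0],
}
# A brand-new user whose vector comes only from onboarding answers.
new_user = [1.0, 0.3, 0.0]
picks = recommend(new_user, catalog, k=2)
```

Because the user vector is built from stated interests alone, no listening history is needed to produce the first ranking.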

The system’s content modeling process involves sophisticated natural language processing to extract meaningful features from podcast titles, descriptions, and transcripts. We employ a two-level tagging structure that captures both broad categories and specific entities, allowing for precise content categorization while maintaining semantic relationships. This approach enables our system to understand not just what content is about, but how different pieces of content relate to each other conceptually.

As we accumulate user interaction data, the system transitions to collaborative filtering techniques that can discover hidden patterns and relationships between users and content. This phase leverages user-content interaction matrices and applies matrix factorization techniques to identify latent factors that influence user preferences. The collaborative approach excels at uncovering content relationships that might not be obvious from content analysis alone, leading to more sophisticated and accurate recommendations.
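To make the matrix factorization idea concrete, the sketch below learns two latent factors per user and per item with plain stochastic gradient descent. The interaction values (1.0 for a finished episode, 0.0 for a skipped one), the hyperparameters, and the function names are assumptions chosen for this toy example; production systems typically rely on a dedicated library.

```python
import random


def predict(P, Q, u, i):
    """Predicted affinity: dot product of user and item factor vectors."""
    return sum(pf * qf for pf, qf in zip(P[u], Q[i]))


def mse(P, Q, ratings):
    """Mean squared error over the observed interactions."""
    return sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings)


def factorize(ratings, n_users, n_items, n_factors=2,
              lr=0.1, reg=0.02, epochs=500, seed=0):
    """Learn latent factors from (user, item, value) triples with SGD."""
    rng = random.Random(seed)
    P = [[rng.uniform(-0.1, 0.1) for _ in range(n_factors)] for _ in range(n_users)]
    Q = [[rng.uniform(-0.1, 0.1) for _ in range(n_factors)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(n_factors):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step with L2 regularization on both factors.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


# Users 0-1 finish items 0-1; users 2-3 finish items 2-3. Four pairs are unobserved.
ratings = [
    (0, 0, 1.0), (0, 1, 1.0), (0, 2, 0.0),
    (1, 0, 1.0), (1, 1, 1.0), (1, 3, 0.0),
    (2, 2, 1.0), (2, 3, 1.0), (2, 0, 0.0),
    (3, 2, 1.0), (3, 3, 1.0), (3, 1, 0.0),
]
P0, Q0 = factorize(ratings, 4, 4, epochs=0)   # random factors, no training
P, Q = factorize(ratings, 4, 4)
untrained_error = mse(P0, Q0, ratings)
trained_error = mse(P, Q, ratings)
unseen_score = predict(P, Q, 0, 3)  # score for a pair that was never observed
```

The learned factors let the system score pairs that never appeared in the interaction matrix, which is the property that content analysis alone does not provide.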

The long-term vision encompasses multi-modal personalized recommendations that incorporate contextual factors, user mood, consumption patterns, and environmental considerations. This future state will enable truly intelligent routing between different recommendation strategies based on real-time assessment of user needs and preferences.

Effective personalization requires deep understanding of user preferences, and our system employs both explicit and implicit data collection strategies to build comprehensive user profiles. The explicit data collection process begins with carefully designed onboarding questions that efficiently capture essential user characteristics including age demographics, gender identity, interest areas, and inspirational figures. These questions are designed based on extensive analysis of successful onboarding flows from leading content platforms, optimized to gather maximum signal while minimizing user friction.

The system maps user responses to our content taxonomy through sophisticated attribute mapping. For example, when a user indicates admiration for Steve Jobs, the system automatically associates attributes like innovation, leadership, technology, design, and entrepreneurship with their profile. This mapping approach allows us to infer detailed interest profiles from relatively simple user inputs.
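The attribute mapping can be pictured as a lookup table that expands each onboarding answer into weighted taxonomy tags. In the sketch below, only the Steve Jobs entry comes from the text; the table structure, the function name, and the 0.5 weight for inferred tags are illustrative assumptions.

```python
from collections import Counter

# Hypothetical lookup table; only this entry is taken from the text.
FIGURE_ATTRIBUTES = {
    "Steve Jobs": ["innovation", "leadership", "technology",
                   "design", "entrepreneurship"],
}


def build_interest_profile(figures, stated_interests, figure_weight=0.5):
    """Merge explicit interests with tags inferred from admired figures."""
    profile = Counter()
    for tag in stated_interests:
        profile[tag] += 1.0            # stated interests count in full
    for name in figures:
        for tag in FIGURE_ATTRIBUTES.get(name, []):
            profile[tag] += figure_weight  # inferred tags count less
    return dict(profile)


profile = build_interest_profile(["Steve Jobs"], ["design"])
```

Giving inferred tags a lower weight than stated ones is a design choice in this sketch: it keeps a single onboarding answer from overwhelming what the user said directly.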

Implicit data collection focuses on user behavior patterns including play duration, completion rates, engagement actions like likes and shares, and negative signals such as skipping or hiding content. These behavioral signals often provide more accurate insight into true user preferences than explicit feedback, as they reflect actual consumption patterns rather than stated preferences. The system applies different weights to various interaction types, recognizing that sharing content represents a much stronger positive signal than simply playing it.

The described recommendation engine employs a sophisticated multi-channel recall strategy that combines different approaches to maximize both relevance and diversity. Vector similarity recall serves as the primary channel, leveraging user profile vectors to find semantically similar content through cosine similarity calculations in our embedding space. This approach excels at discovering content that matches the conceptual themes and topics that resonate with individual users.

Tag-based matching provides a complementary recall channel that ensures precise alignment with explicitly stated user interests. By directly matching user preference tags with content categorizations, this channel guarantees that recommendations include content from areas users have specifically indicated interest in. The tag-based approach offers high precision and interpretability, making it particularly valuable for users who have clear, well-defined interests.

Collaborative filtering recall identifies content enjoyed by users with similar preference patterns, enabling discovery of potentially relevant content that might not be obvious from content analysis alone. This channel is particularly effective at uncovering serendipitous recommendations and helping users discover new areas of interest based on the wisdom of crowds.

The system includes trending content recall as a quality backstop that ensures recommendations always include high-engagement, recent content. This channel serves multiple purposes: it provides a fallback when other channels produce insufficient results, introduces temporal relevance signals, and helps surface content that has demonstrated broad appeal across user segments.
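The four recall channels can be merged in a simple way: take a quota of candidates from each primary channel, drop duplicates, then top up from trending content if the list is still short. The sketch below assumes each channel already returns a ranked list of item IDs; the quotas, channel names, and function name are hypothetical.

```python
def merge_recall(channels, trending, quotas, target=10):
    """Merge ranked candidate lists, using trending items as a backstop.

    channels: dict of channel name -> ranked list of item IDs
    quotas:   dict of channel name -> max items to take from that channel
    """
    seen, merged = set(), []
    for name, candidates in channels.items():
        taken = 0
        for item in candidates:
            if taken >= quotas.get(name, 0):
                break
            if item not in seen:
                seen.add(item)
                merged.append((item, name))
                taken += 1
    # Backstop: fill any remaining slots with trending content.
    for item in trending:
        if len(merged) >= target:
            break
        if item not in seen:
            seen.add(item)
            merged.append((item, "trending"))
    return merged[:target]


channels = {
    "vector": ["a", "b", "c"],
    "tag": ["b", "d"],
    "collab": ["e"],
}
quotas = {"vector": 2, "tag": 2, "collab": 2}
merged = merge_recall(channels, trending=["a", "f", "g"], quotas=quotas, target=6)
```

Recording the source channel alongside each item keeps the merged list interpretable and makes it possible to measure how much each channel contributes.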

Understanding that users consume content differently based on their current context and goals, our system implements multiple recommendation modes that optimize for different user states and intentions. The default mode provides a balanced approach that combines relevance with exploration, offering users a mix of familiar and novel content that maintains engagement while encouraging discovery.

Fresh mode activates when the system detects user fatigue with current recommendations or when users explicitly seek the latest content. This mode heavily weights recent publications and trending topics, ensuring users stay current with evolving conversations in their areas of interest. The system automatically triggers fresh mode based on behavioral signals like consecutive content skipping or explicit user requests for newer content.

Familiar mode serves users who want to go deeper into established interest areas or seek stable, reliable content experiences. This mode emphasizes content similarity to previously consumed and highly-rated items, helping users build expertise in specific domains. The system may automatically suggest familiar mode for users demonstrating deep engagement with particular topics or content creators.

Explore mode encourages users to venture into new territory and expand their interest horizons. This mode deliberately introduces content from adjacent or entirely new categories, balanced with enough familiar elements to maintain relevance. The system’s explore mode incorporates sophisticated algorithms to identify promising expansion areas based on a user’s existing interests and successful exploration patterns from similar users.
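The mode selection described above could be expressed as a small rule-based router. The sketch below is one possible reading: an explicit request always wins, a run of consecutive skips triggers fresh mode, and repeated completions on one topic suggest familiar mode. The thresholds and the action format are assumptions, and in this simplified version explore mode is reachable only by explicit request.

```python
from collections import Counter

MODES = {"default", "fresh", "familiar", "explore"}


def choose_mode(recent_actions, requested_mode=None,
                skip_threshold=3, deep_threshold=5):
    """Pick a recommendation mode from recent behavior (oldest action first)."""
    if requested_mode in MODES:
        return requested_mode  # explicit user choice always wins

    # Count the unbroken run of skips at the end of the history.
    trailing_skips = 0
    for action in reversed(recent_actions):
        if action["type"] != "skip":
            break
        trailing_skips += 1
    if trailing_skips >= skip_threshold:
        return "fresh"

    # Many completions on a single topic suggest deep engagement.
    completed = Counter(a["topic"] for a in recent_actions
                        if a["type"] == "complete")
    if completed and max(completed.values()) >= deep_threshold:
        return "familiar"
    return "default"


history = [{"type": "complete", "topic": "ai"}] + [
    {"type": "skip", "topic": "sports"} for _ in range(3)
]
mode = choose_mode(history)
```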

Raw similarity-based recommendations often suffer from homogeneity and filter bubble effects that limit user discovery and engagement. Our system addresses these challenges through sophisticated filtering and diversification algorithms that maintain relevance while ensuring recommendation variety. The filtering process removes content users have already consumed unless they’re specifically in familiar mode, and applies quality thresholds based on content ratings and engagement metrics.

Diversification algorithms ensure that recommendation sets include variety across multiple dimensions including content categories, author backgrounds, content length, and topic complexity. The system employs greedy diversification selection that iteratively chooses content to maximize overall set diversity while maintaining individual item relevance. This approach prevents recommendation lists from becoming monotonous while still providing users with content that matches their interests.
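Greedy diversification selection can be sketched as a loop that repeatedly picks the item with the best blend of relevance and novelty relative to what has already been chosen. The version below diversifies over a single dimension (category) and uses a simple novelty term of 1 / (1 + number of already-selected items in the same category); the trade-off value, the novelty formula, and the sample data are assumptions for illustration.

```python
def diversify(candidates, k, trade_off=0.7):
    """Greedily select k items, balancing relevance against category repetition.

    candidates: list of dicts with 'id', 'relevance' (0 to 1), and 'category'
    trade_off:  1.0 means pure relevance; lower values favor variety
    """
    selected = []
    remaining = list(candidates)

    def gain(item):
        same = sum(1 for s in selected if s["category"] == item["category"])
        novelty = 1.0 / (1 + same)  # shrinks as a category repeats
        return trade_off * item["relevance"] + (1 - trade_off) * novelty

    while remaining and len(selected) < k:
        best = max(remaining, key=gain)
        selected.append(best)
        remaining.remove(best)
    return selected


candidates = [
    {"id": "A", "relevance": 0.95, "category": "tech"},
    {"id": "B", "relevance": 0.90, "category": "tech"},
    {"id": "C", "relevance": 0.88, "category": "tech"},
    {"id": "D", "relevance": 0.80, "category": "health"},
    {"id": "E", "relevance": 0.75, "category": "business"},
]
diverse_ids = [c["id"] for c in diversify(candidates, k=3)]
relevance_only_ids = [c["id"] for c in diversify(candidates, k=3, trade_off=1.0)]
```

In this example the diversified list is A, D, E, while ranking by relevance alone would return three tech items (A, B, C).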

The system also implements content quality filters that prioritize well-reviewed, high-engagement content while giving newer content opportunities to surface based on early engagement signals. These quality mechanisms help maintain user trust in recommendations while supporting content creator discovery.

Modern recommendation systems must continuously evolve based on user feedback and changing preferences. Our system implements real-time feedback processing that immediately incorporates user interactions into the recommendation engine. Different interaction types receive different weight values, with explicit positive actions like sharing carrying more influence than passive consumption metrics.

The system captures detailed interaction data including play duration, completion rates, engagement timing, and skip patterns to understand not just what users like, but how they engage with different types of content. This granular behavioral data enables sophisticated preference modeling that adapts recommendations based on consumption context and user engagement patterns.

When users provide strong positive or negative feedback, the system triggers immediate user vector updates to ensure subsequent recommendations reflect their latest preferences. This real-time adaptation capability ensures that the recommendation experience continuously improves as users interact with the platform.
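A simple way to picture the real-time update is to nudge the user vector toward an item after positive feedback and away from it after negative feedback, scaled by a per-interaction weight. The weights, learning rate, and function name below are hypothetical; the only property taken from the text is that sharing should count for more than passive playback.

```python
# Hypothetical signal weights: sharing outweighs a plain play,
# and skips or hides push the profile away from the item.
INTERACTION_WEIGHTS = {
    "play": 0.1, "complete": 0.3, "like": 0.5, "share": 1.0,
    "skip": -0.3, "hide": -1.0,
}


def update_user_vector(user_vec, item_vec, interaction, lr=0.2):
    """Move the user vector toward (or away from) the item vector."""
    w = INTERACTION_WEIGHTS[interaction]
    return [u + lr * w * (i - u) for u, i in zip(user_vec, item_vec)]


user = [0.0, 0.0]
item = [1.0, 1.0]
after_share = update_user_vector(user, item, "share")  # moves toward the item
after_skip = update_user_vector(user, item, "skip")    # moves slightly away
```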

The effectiveness of our recommendation system is evaluated through multiple complementary metrics that capture different aspects of user satisfaction and engagement. Click-through rates measure the immediate appeal of recommendations, while completion rates and time-per-recommendation indicate whether users find recommended content genuinely valuable. Long-term metrics including user retention rates and subscription conversion provide insight into the system’s impact on overall platform success.

Beyond traditional engagement metrics, we employ LLM-based evaluation frameworks that assess recommendation quality across multiple dimensions including personal context alignment, discovery value, diversity contribution, and fundamental quality indicators. This comprehensive evaluation approach ensures that our optimization efforts improve genuine user satisfaction rather than simply maximizing narrow engagement metrics.

The measurement framework also includes user feedback mechanisms that allow direct quality assessment through rating systems and explicit feedback collection. This human feedback serves both as a training signal for our algorithms and a validation mechanism for our automated quality assessments.

The described recommendation system represents a significant step forward in personalized content discovery, but it’s designed as a foundation for even more sophisticated future capabilities. Planned enhancements include integration with large language models for natural language recommendation interfaces, advanced content segmentation for granular recommendations within longer content pieces, and cross-platform recommendation capabilities that understand user preferences across different content consumption contexts.

The system’s modular architecture enables continuous enhancement and experimentation with new recommendation techniques while maintaining stable, high-quality user experiences. As we gather more user data and refine our understanding of content consumption patterns, the system will evolve to provide increasingly sophisticated and valuable recommendations that truly understand and serve individual user needs.

This approach to recommendation system design demonstrates that effective personalization requires deep understanding of both content and users, sophisticated technical implementation, and continuous adaptation based on real-world usage patterns. By focusing on the fundamental problems users face in content discovery and building flexible, evolving solutions, we can create recommendation experiences that genuinely enhance rather than simply automate the content discovery process.

May 20, 2024

Leverage generative AI capabilities for creative script ideation and video ad creation. (Worked on agentic workflows and interface optimization) https://ads.tiktok.com/business/copilot/standalone?locale=en&deviceType=pc

Credits: TikTok Creative Team

The transition from LLMs to Agents has become a consensus in the AI community, representing an improvement in complex task execution capabilities. However, helping users fully utilize Agent capabilities to achieve tenfold efficiency gains requires careful workflow design. These workflows aren’t merely a presentation of parallel capabilities, but seamless integrations with human-in-the-loop quality assurance. This document uses Typeface as a reference to explain why a clear primary workflow is necessary, as well as design approaches for functional extensions.

Google held its Google Cloud Next conference from April 9-11, announcing products like Google Vids, Gemini, Vertex AI, and related updates.

From a consumer product perspective, despite Google releasing many products, they were relatively superficial (Google Vids, Workspace AI, etc.). Examples like their Sales Agent demonstration were awkward in workflow, similar to Amazon Rufus. However, the enhanced data insight capabilities enabled by long context windows are becoming a confirmed trend.

From a business product perspective, while Google showcased many Agent applications built on Gemini and Vertex AI and emphasized their powerful functionality, they glossed over the difficulties of actual deployment. Currently, both large tech companies and traditional businesses face challenges in implementing truly effective workflows.

LLMs deliver not just tools, but work results at specific stages of a process. Application deployment can be viewed as providing models with specific contexts and clear behavioral standards. The understanding and reasoning capabilities of LLMs can be applied to various scenarios; packaging general capabilities as abilities needed for specific positions or processes involves overlaying domain expertise with general intelligence.

We should look beyond ChatBot and Agent dimensions to view applications from a Workflow perspective. What parts of daily workflows can be taken over by LLMs? If large models need to process certain enterprise data, what value does this data provide in the business? Where does it sit in the value chain? In the current operational model, which links could be replaced with large models?

Content that has reached consensus:

Most companies (AI21 Labs, Anthropic, etc.) are now developing task-specific models and Mixture of Experts (MoE) architectures. MoE has been widely applied in natural language processing, computer vision, speech recognition, and other fields. It improves model flexibility and scalability and, because only some experts need to run for a given input, reduces the computation required per input, thereby enhancing model efficiency and generalization ability (Mixture of Experts Explained).

The MoE architecture is a deep learning model structure composed of multiple expert networks, each responsible for handling specific tasks or datasets. Input data is assigned to different expert networks for processing, each returning an output, and the final output is a weighted sum of the expert outputs.

The core idea of MoE is to break down a large, complex task into multiple smaller, simpler tasks, with different expert networks handling different tasks. Total parameter count can stay large, but when only a subset of experts is activated per input, the computation per input stays low.

Implementing an MoE architecture typically requires the following steps:

Define expert networks: First, define multiple expert networks, each responsible for handling specific tasks or datasets. These expert networks can be different deep learning models such as CNNs, RNNs, etc.

Train expert networks: Use labeled training data to train each expert network to obtain weights and parameters.

Allocate data: During training, input data needs to be allocated to different expert networks for processing. Data allocation methods can be random, task-based, data-based, etc.

Summarize results: Weight and sum the output results of each expert network to get the final output.

Train the model: Use labeled training data to train the entire MoE architecture to obtain final model weights and parameters.
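The forward pass implied by the steps above (allocate data, then weight and sum expert outputs) can be sketched as follows. This is a toy illustration under stated assumptions, not any production MoE: each expert is a single linear unit, allocation is done by a softmax gate (one common choice; the steps above also allow random or task-based allocation), the weights are random rather than trained, and the names `TinyMoE`, `gate_weights`, and `top_k` are hypothetical.

```python
import math
import random


def softmax(scores):
    """Turn raw gate scores into non-negative weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, x):
    """Dot product of one weight vector with the input vector."""
    return sum(w * xi for w, xi in zip(weights, x))


class TinyMoE:
    """Toy mixture of experts: every expert is one linear unit (assumption)."""

    def __init__(self, num_experts, dim, seed=0):
        rng = random.Random(seed)
        # Untrained, random parameters; training (steps 2 and 5) is omitted.
        self.experts = [[rng.uniform(-1, 1) for _ in range(dim)]
                        for _ in range(num_experts)]
        self.gate = [[rng.uniform(-1, 1) for _ in range(dim)]
                     for _ in range(num_experts)]

    def gate_weights(self, x):
        """Allocate data: score each expert for this input."""
        return softmax([linear(g, x) for g in self.gate])

    def forward(self, x, top_k=None):
        """Summarize results: weighted sum of expert outputs."""
        weights = self.gate_weights(x)
        if top_k is not None:
            # Sparse routing: keep the top_k experts and renormalize.
            keep = sorted(range(len(weights)),
                          key=lambda i: weights[i], reverse=True)[:top_k]
            total = sum(weights[i] for i in keep)
            weights = [weights[i] / total if i in keep else 0.0
                       for i in range(len(weights))]
        # Experts with zero weight are skipped, which is where compute is saved.
        return sum(w * linear(e, x)
                   for w, e in zip(weights, self.experts) if w > 0.0)
```

Calling `TinyMoE(num_experts=4, dim=3).forward([0.5, -1.0, 2.0], top_k=1)` runs only the single highest-weighted expert, while omitting `top_k` mixes all four.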

At the Gemini 1.5 Hackathon at AGI House, Jeff Dean highlighted two significant aspects of Gemini 1.5: a 1 million token context window, which opens up new capabilities with in-context learning, and the MoE (Mixture of Experts) architecture.

Writesonic (https://writesonic.com) uses GPT Router for LLM Routing during AI Model Selection.

GPT Router (https://github.com/Writesonic/GPTRouter) allows smooth management of multiple LLMs (OpenAI, Anthropic, Azure) and Image Models (Dall-E, SDXL), speeds up responses, and ensures non-stop reliability.

```python
from gpt_router.client import GPTRouterClient
from gpt_router.models import ModelGenerationRequest, GenerationParams
from gpt_router.enums import ModelsEnum, ProvidersEnum

client = GPTRouterClient(base_url='your_base_url', api_key='your_api_key')

messages = [
    {"role": "user", "content": "Write me a short poem"},
]
prompt_params = GenerationParams(messages=messages)

claude2_request = ModelGenerationRequest(
    model_name=ModelsEnum.CLAUDE_INSTANT_12,
    provider_name=ProvidersEnum.ANTHROPIC.value,
    order=1,
    prompt_params=prompt_params,
)

response = client.generate(ordered_generation_requests=[claude2_request])
print(response.choices[0].text)
```
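The `order` field and the `ordered_generation_requests` argument in the snippet above suggest that requests are tried in sequence. The general idea of ordered fallback routing can be sketched without the library, as below. This is a generic illustration and not GPT Router's implementation: `Route`, `generate_with_fallback`, and the two stand-in provider functions are hypothetical names, and real provider calls are replaced by local functions so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Route:
    name: str                    # hypothetical label for a model/provider pair
    order: int                   # lower values are tried first
    call: Callable[[str], str]   # stand-in for a real provider call


def generate_with_fallback(routes: List[Route], prompt: str) -> str:
    """Try each route in ascending order; return the first successful result."""
    errors = []
    for route in sorted(routes, key=lambda r: r.order):
        try:
            return route.call(prompt)
        except Exception as exc:  # any provider failure triggers fallback
            errors.append(f"{route.name}: {exc}")
    raise RuntimeError("all routes failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    """Simulates a provider outage."""
    raise TimeoutError("simulated outage")


def stable_backup(prompt: str) -> str:
    """Simulates a healthy backup provider."""
    return f"[backup] {prompt}"


routes = [
    Route("backup", order=2, call=stable_backup),
    Route("primary", order=1, call=flaky_primary),
]
result = generate_with_fallback(routes, "Write me a short poem")
print(result)
```

Here the primary route fails, so the call falls through to the backup. A real router would also need timeouts, retries, and per-provider request formats.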

Content that is still not determined:

What constitutes a reasonable workflow remains to be determined. Some scenarios, like Amazon Rufus shopping guidance (where users need to converse before selecting products), differ significantly from existing user workflows and fail to provide efficiency improvements. -Verge

Many companies conducting needs validation are choosing customer profiles too similar to themselves or their friends, so the authenticity of these needs remains questionable. Additionally, existing AI product business models are trending toward price wars at the foundational level, with unclear differentiation at the application layer. -Google Ventures

AutoGPT represents the vision of accessible AI for everyone, to use and build upon. Their mission is to provide tools so users can focus on what matters. https://github.com/Significant-Gravitas/AutoGPT

A GPT-based autonomous agent that conducts comprehensive online research on any given topic. https://github.com/assafelovic/gpt-researcher

The advertising and marketing industry is one of the business sectors where AIGC is most widely applied. AI products are available for various stages, from initial market analysis to brainstorming, personalized guidance, ad copywriting, and video production. These products aim to reduce content production costs and accelerate creative implementation. However, most current products offer only single or partial functions and cannot complete the entire video creation process from scratch.

- Concept Design: Midjourney
- Script + Storyboard: ChatGPT
- AI Image Generation: Midjourney, Stable Diffusion, D3
- AI Video: Runway, Pika, Pixverse, Morph Studio
- Dialogue + Narration: Eleven Labs, Ruisheng
- Sound Effects + Music: SUNO, UDIO, AUDIOGEN
- Video Enhancement: Topaz Video
- Subtitles + Editing: CapCut, JianYing

User Need: Adjusting generation style through prompts before each generation is time-consuming and unpredictable. A comprehensive set of generation rules can help ensure that generated content consistently meets user needs, avoiding repeated adjustments. Example: Typeface Brand Kit

User Need: From a probability perspective, the accuracy of Agent Chaining decreases progressively. Setting up human-in-the-loop processes allows users to regenerate or fine-tune after each step, helping ensure final generation quality. Example: Typeface Projects (also includes Magic Prompt to assist with prompt generation)

User Need: Users want options. In existing generation processes, if users are dissatisfied with generated content, they need to refresh the generation, which is inefficient. Providing multiple options in a single generation can improve user experience. Example: Typeface Image Generator (also supports favoriting)

User Need: Currently, some users need to use 5-10 AI capabilities to complete advertising video creation. Most capabilities are disconnected, requiring frequent switching. By establishing a clear workflow, users can more efficiently invoke relevant tools to complete their creation. Example: Typeface Workflow (all capabilities presented at the appropriate stages)
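The Agent Chaining point above can be made concrete with a short calculation. This is a back-of-the-envelope sketch, assuming every step succeeds independently with the same accuracy and that a human reviewer always catches a bad step and triggers a regeneration; both assumptions are simplifications, and the function names are illustrative.

```python
def chain_success(step_accuracy: float, steps: int) -> float:
    """Probability that an unreviewed chain gets every step right."""
    return step_accuracy ** steps


def chain_success_with_review(step_accuracy: float, steps: int,
                              max_attempts: int) -> float:
    """Same chain, but each step may be regenerated up to max_attempts times.

    Assumes the reviewer always spots a failed step (idealized).
    """
    per_step = 1 - (1 - step_accuracy) ** max_attempts
    return per_step ** steps


# Five chained steps at 90% accuracy each.
print(round(chain_success(0.9, 5), 4))                 # 0.5905
print(round(chain_success_with_review(0.9, 5, 2), 4))  # 0.951
```

Under these assumptions, one allowed regeneration per step lifts end-to-end success from roughly 59% to roughly 95%, which is the case for reviewing after each step rather than only at the end.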

Typeface was founded in May 2022 and is based in San Francisco. In February 2023, it received $65 million in Series A funding from Lightspeed Venture Partners, GV (Google Ventures), Menlo Ventures, and M12 (Microsoft’s venture fund). In July 2023, it completed a $100 million Series B round led by Salesforce Ventures, with Lightspeed Venture Partners, Madrona, GV, Menlo Ventures, and M12 participating. To date, Typeface has raised a total of $165 million, at a post-money valuation of $1 billion. (Product positioning: 10x content factory)

Multiple Agent calls centered around the core document editing experience.

When users log into the Typeface homepage, they see four core functions in the left toolbar (Projects, Templates, Brands, Audience). The main page shows corresponding workflow options (Create a product shot, generate some text, etc.). The Getting Started Guide at the bottom of the main page provides guidance videos for certain use cases (Set up brand kit, repurpose videos into text) to help users understand how to invoke various capabilities.

When users click to enter the Brands page, they can set up multiple Brand generation rules, divided into 3 items:

When users click to enter the Projects page, they see a Google Doc-like interface storing multiple projects. Each project opens to a main document page with a resizable input bar at the bottom. Clicking the input bar presents options:

Additionally, users can select Refine to adjust generation language and tone (fixed options).

After clicking Create an image, users enter the image editing page, which integrates the following functions on the left: Add, Select, Extend, Lighting, Color, Effects, and Adobe Express. Users can generate and adjust images directly and favorite preferred generations.

The difference from Create an image is that Product shot includes specific products, while image isn’t necessarily product-related.

After clicking Generate text, users enter a prompt input field. Clicking the settings icon in the upper right allows setting Target Audience and Brand Kit. After generation, users can further adjust the prompt for a second generation, and selected content appears in the Project docs.

Typeface offers various generation templates. Users can search and select from the Template library, which adjusts the input box according to the content template, like TikTok Script.

When generating content, users can select user profiles and set Age Range, Gender, Interest or preference, and Spending behavior (with fixed options).

These integrations allow users to create in their familiar workspaces, avoiding the friction of cross-platform collaboration.

https://www.typeface.ai/product/integrations

Integration allows marketers to generate personalized content directly within Dynamics 365 Customer Insights, enhancing productivity and return on investment.

Users can generate multiple personalized creatives for audience-targeted campaigns, support scaling tailored content, and create variations for different target audiences.

Users can define audience segments with customer intelligence from BigQuery’s data from ads, sales, customers, and products to generate a complete suite of custom content for every audience and channel in minutes.

Users can streamline content workflows from their favorite apps and access content drafts within Google Drive, refine, rework, or write from scratch, and share with other stakeholders for quick approvals.

Users can create content in Teams using Typeface’s templates, repurposing existing materials or creating new content. They can make quick edits, such as improving writing, shortening text, or changing tone, all within the Teams chat environment.

Workflows are not just about having various capabilities in the creation process, but also about chaining them together with appropriate GUI process specifications. Current marketing-focused products mostly integrate multiple stages of the creation process, providing workflow-like experiences for users, and reducing cross-platform collaboration friction through external integrations.

April 10, 2024

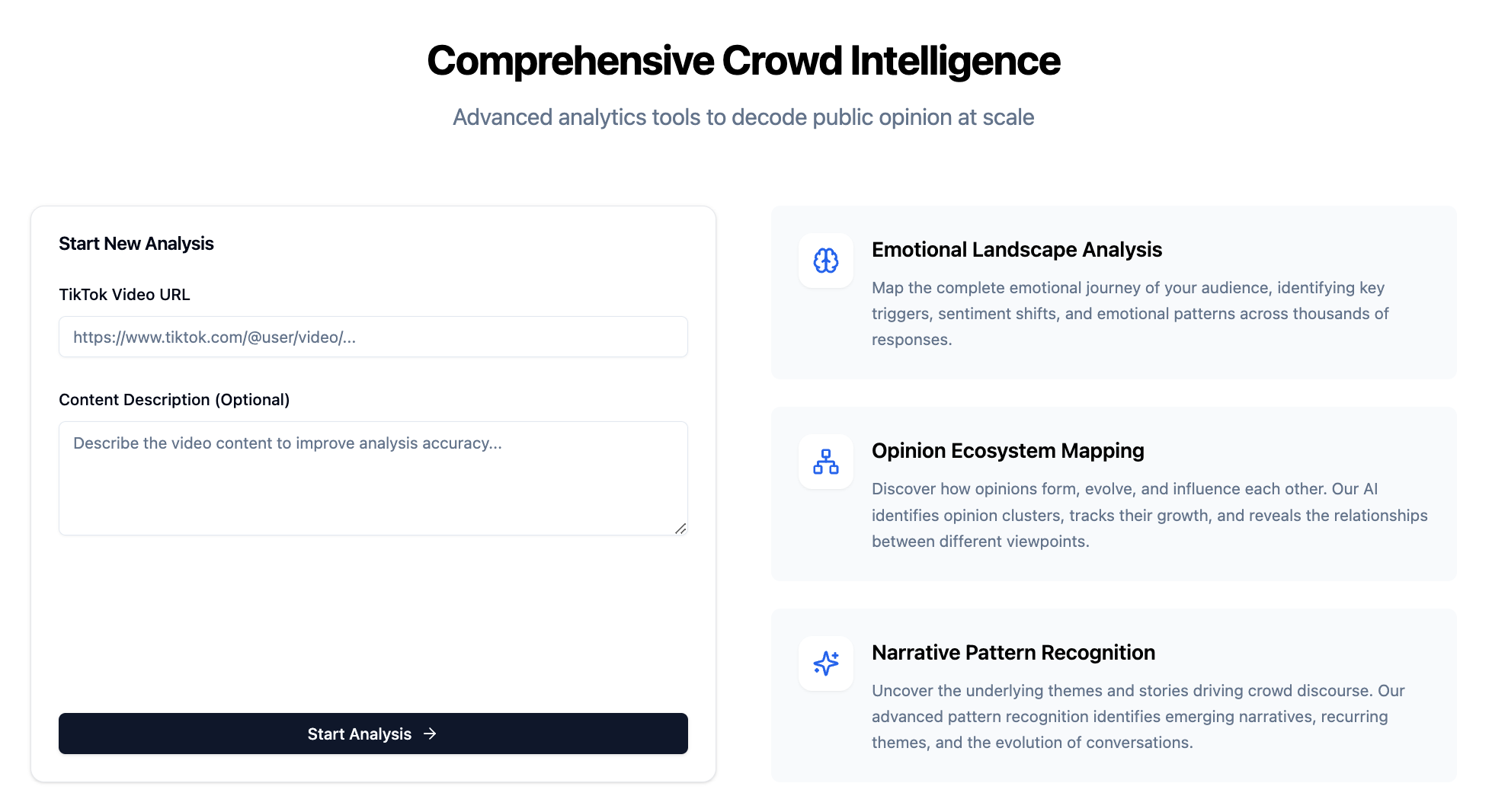

Analyzes thousands of TikToks to provide actionable trends and insights for key agencies. (I worked on multi-modal content understanding.) To be released on TikTok Creative Center (https://ads.tiktok.com/business/creativecenter/pc/en)

Credits: TikTok Creative Team

Jun 3 - Excited to share that TikTok Insight Spotlight, a product I worked on during my time at TikTok, was officially unveiled at the company’s annual advertiser summit on June 3rd, 2025. The Verge covered the launch extensively, highlighting the AI-driven capabilities I helped develop.

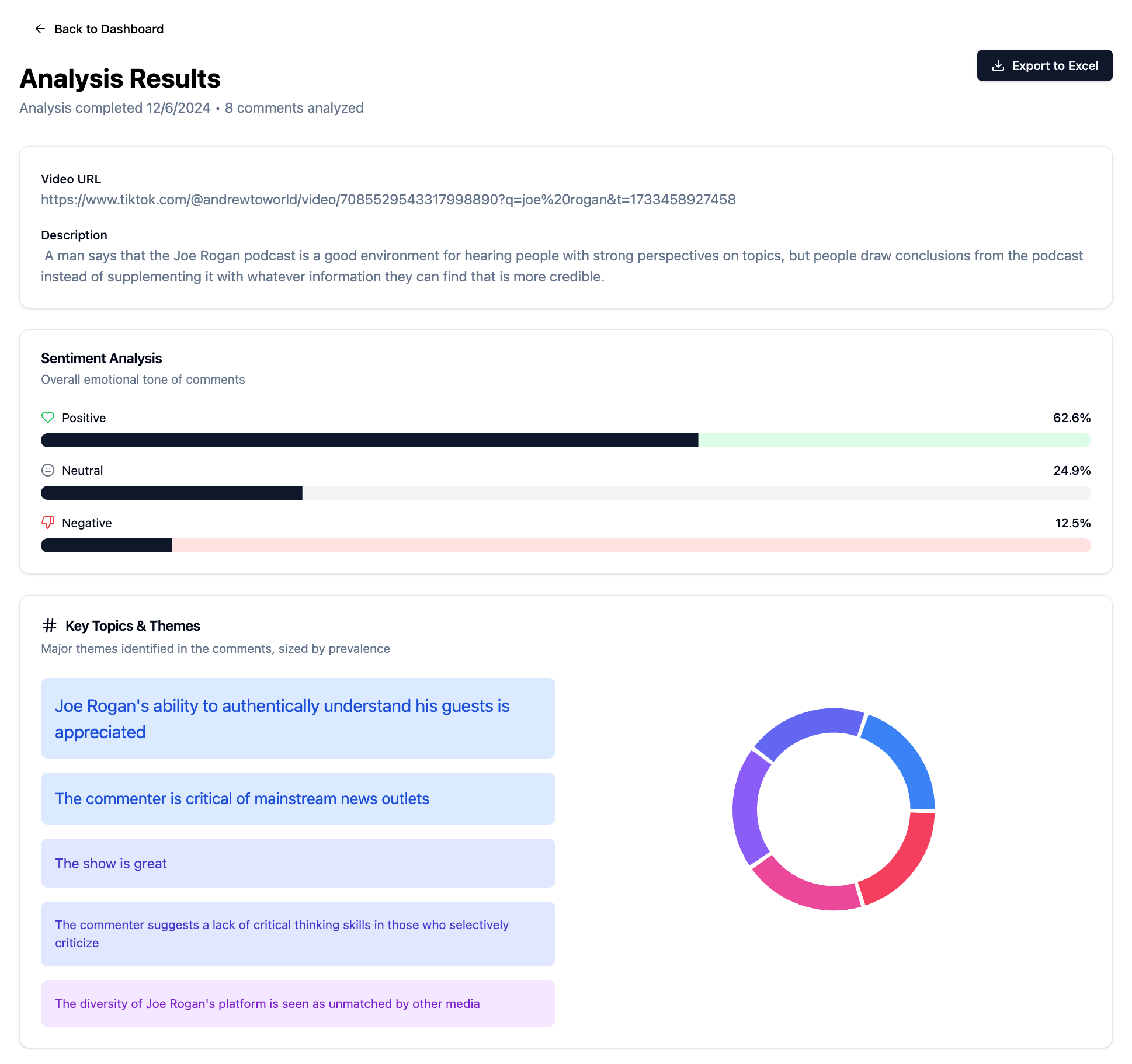

TikTok’s Insight Spotlight interface showing AI-generated analysis - a product I contributed to while at TikTok

As The Verge reported: “TikTok introduced a slew of new advertiser tools at the company’s annual advertiser summit on June 3rd. The new products range from AI-powered ad tools to new features connecting creators and brands, but the overall picture is clear: advertiser content on TikTok is about to become much more tailored and specific.”

The article specifically highlighted the core functionality I worked on: “The company will give brands precise details about how their target audience is using the platform — including AI-generated suggestions on ads to run. Using a tool called Insight Spotlight, advertisers will be able to sort by user demographics and industry to see what videos users in the target group are watching and what keywords are associated with popular content.”

During my time at TikTok, I worked specifically on the multi-modal content understanding capabilities that power Insight Spotlight’s core functionality. Here’s how the features I helped develop work in practice:

The Verge highlighted a key example of the system in action: “In an example provided by TikTok, an AI-generated suggestion recommends that a brand ‘produce video content focused on ‘hormonal health’ for female, English-speaking users’ and to include a specific keyword.” This type of precise, data-driven recommendation is made possible by the multi-modal analysis systems I contributed to.

As The Verge noted: “Another feature in Insight Spotlight analyzes users’ viewing history to identify types of content that are bubbling up.” This capability stems from the advanced content understanding work I did, which enables the system to analyze both visual and audio elements of videos to predict emerging trends before they become mainstream.

The article captures the significance of this shift: “TikTok rose in prominence partly because of its spin-the-wheel, seemingly random quality: anything or anyone could go viral overnight. Brands did their best to keep up by jumping on trends, using popular formats and songs, and partnered with influencers who seemed to be at the center. The new tools will give advertisers even more ways to specifically tailor their content toward what is already happening on the platform and what people are searching for and watching.”

My work on multi-modal content understanding was focused on making this transition from random virality to predictable, data-driven insights possible. By analyzing thousands of TikToks across visual, audio, and textual dimensions, we created a system that can identify patterns and provide actionable recommendations to advertisers.

The Verge perfectly captured the broader vision: “In this way, advertiser tools are TikTok’s equivalent of search engine optimization (SEO) — flooding the zone with content that attempts to capture organic user behaviors. The idea that TikTok can be used as a search engine has been around for several years, and the company says that one in four users search for something within 30 seconds of opening the app.”

This transformation from entertainment platform to search-driven insights tool represents our team’s work - using AI to understand not just what content performs, but why it performs and how brands can leverage those insights.